Managed Upload Create New S3 Instance 1 Hour Session Cognito

Upload Files To AWS S3 From React App Using AWS Amplify

Amazon has made meaningful progress in creating tools, frameworks and libraries that finally let developers focus on the code and be more agile rather than being bogged down with infrastructure concerns. One notable library which has made great progress is AWS Amplify.

What existed as one-off solutions for developers to integrate their mobile and web apps with AWS services (via MobileHub) is now a more cohesive solution in the form of AWS Amplify, and with AWS re:Invent 2018 the introduction of Amplify Console is just icing on the cake.

In this post, though, we are going to look at how to use AWS Amplify to let users upload files to S3 buckets from a React app. The use case is simple: authenticate users of your React app from the browser through Cognito and allow them to upload files to an S3 bucket. I'll be honest, there's quite a bit of configuration involved, but nothing that can't be done, so let's get to it.

Authentication Using Cognito (a pre-requisite)

You can skip this section if you already have a Cognito User Pool and Identity Pool set up.

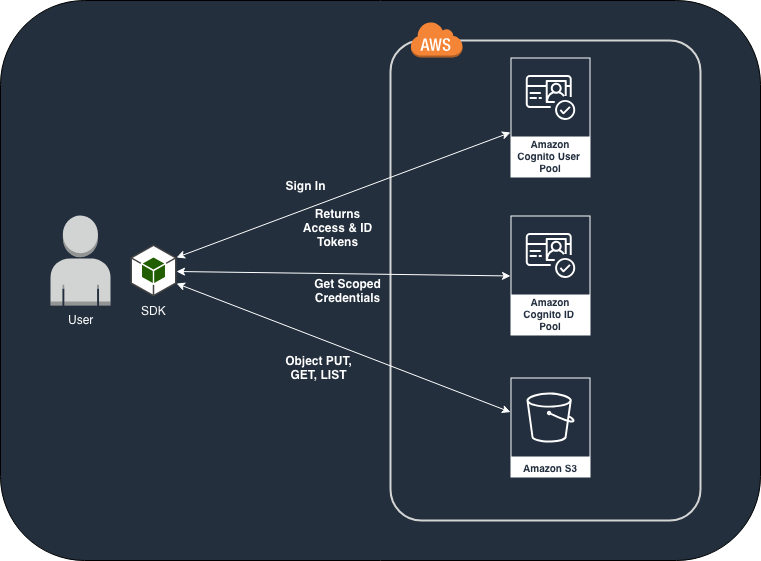

Before we go into the details of implementing Amplify with React, it's important to understand what role Cognito plays in this entire process. If you didn't already know, Cognito makes it easy for you to let your users sign up and sign in to your app, and enables you to manage their access to your AWS services (such as, in this case, S3). For the sake of this article it's important to understand the two functions of Cognito:

- Cognito User Pool: User pools allow you to setup how your users are going to sign-up and sign-in to your app (i.e. using email, or username, or telephone number or all of them), it permit's you define countersign policy (eg. minimum viii characters), define custom user attributes, enable MFA (multi factor hallmark), and and then on. You can cheque out this webinar on how to create a user pool (or there are plethora of articles online that will assist you lot get started). Once, a user pool is created, it should provide yous a "Cognito User Pool ID" (eg.

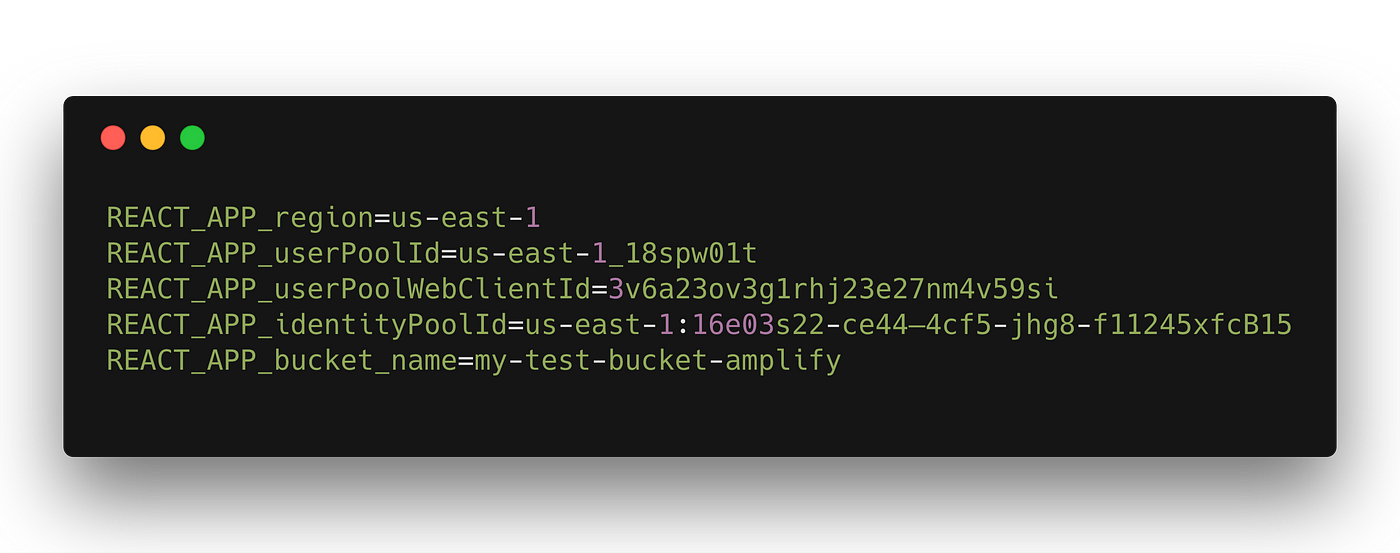

the states-east-1_18spw01t) and an "App client ID" (eg:3v6a23ov3g1rhj23e27nm4v59si). We will need both of these after. - Cognito Identity Pool: Technically, a User Pool lone is enough to setup a basic authentication service with Cognito for your app. However, if you want your users to have fine grain admission to other AWS Services, or maybe integrate 3rd political party Hallmark providers such as Google, Facebook, Twitter, SAML etc. you will need to setup a "Federated Identity" using Cognito Identity Pool (CIP). CIP lets yous assign IAM roles at authenticated and unauthenticated levels which basically dictates what services (or parts of services) tin can a user access if they are authenticated vs. unauthenticated. Simply like a user pool a CIP volition take its own ID (eg:

united states of america-east-1:16e03s22-ce44–4cf5-jhg8-f11245xfcB15). Nosotros volition keep a note of this as we volition need it later.

Once we have Cognito ready to go we can move on to the next section.

Configure S3 permissions in Cognito

Once we have our User Pool and Identity Pool ready, we need a way to add permissions to the Identity Pool which will give our users the ability to perform S3 operations (like PUT, GET, LIST etc.). When a user authenticates using the Cognito Identity Pool, their identity "assumes" the IAM role that we assigned to the identity pool, and they can then perform the allowed operations on S3.

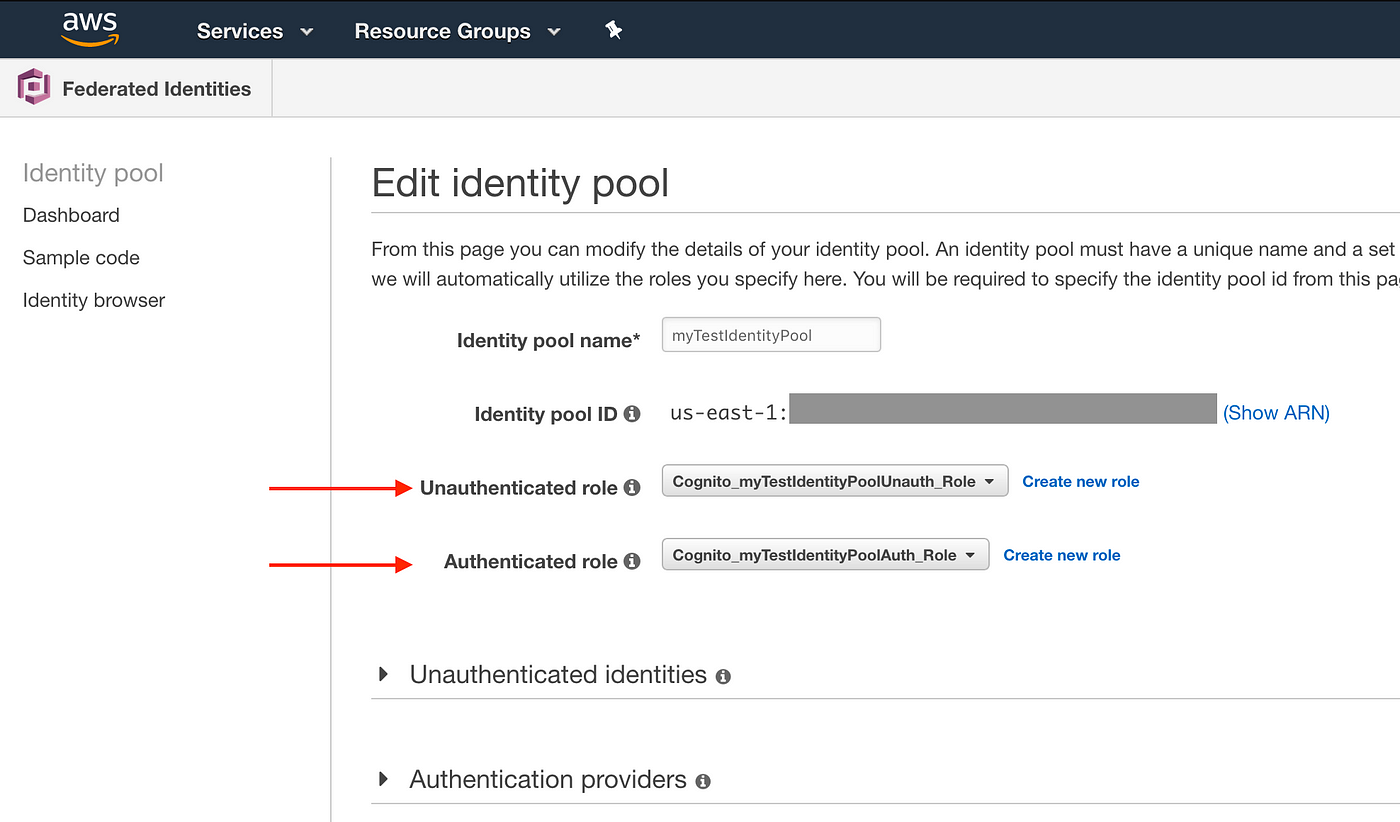

Identity Pool allows you to add two types of roles (IAM Roles).

- Authenticated Role: For when the user is in authenticated state.

- Unauthenticated Role: For when the user is in unauthenticated state.

We will need to assign individual IAM Roles to both, even though we are only going to use the Authenticated Role, since we want our users to be able to upload files only if they are authenticated.

Head over to the Cognito console and click on "Manage Identity Pool" from the homepage. This should take you to the identity pool manager. On the next page select your identity pool and then the "Edit identity pool" link in the top right-hand corner.

When you create an Identity Pool, two roles will be created for you by Cognito: one for the "Unauthenticated Role" and another for the "Authenticated Role", as shown in the image above. We will need to modify these roles using the IAM console to add S3 permissions. Head over to IAM to edit the roles.

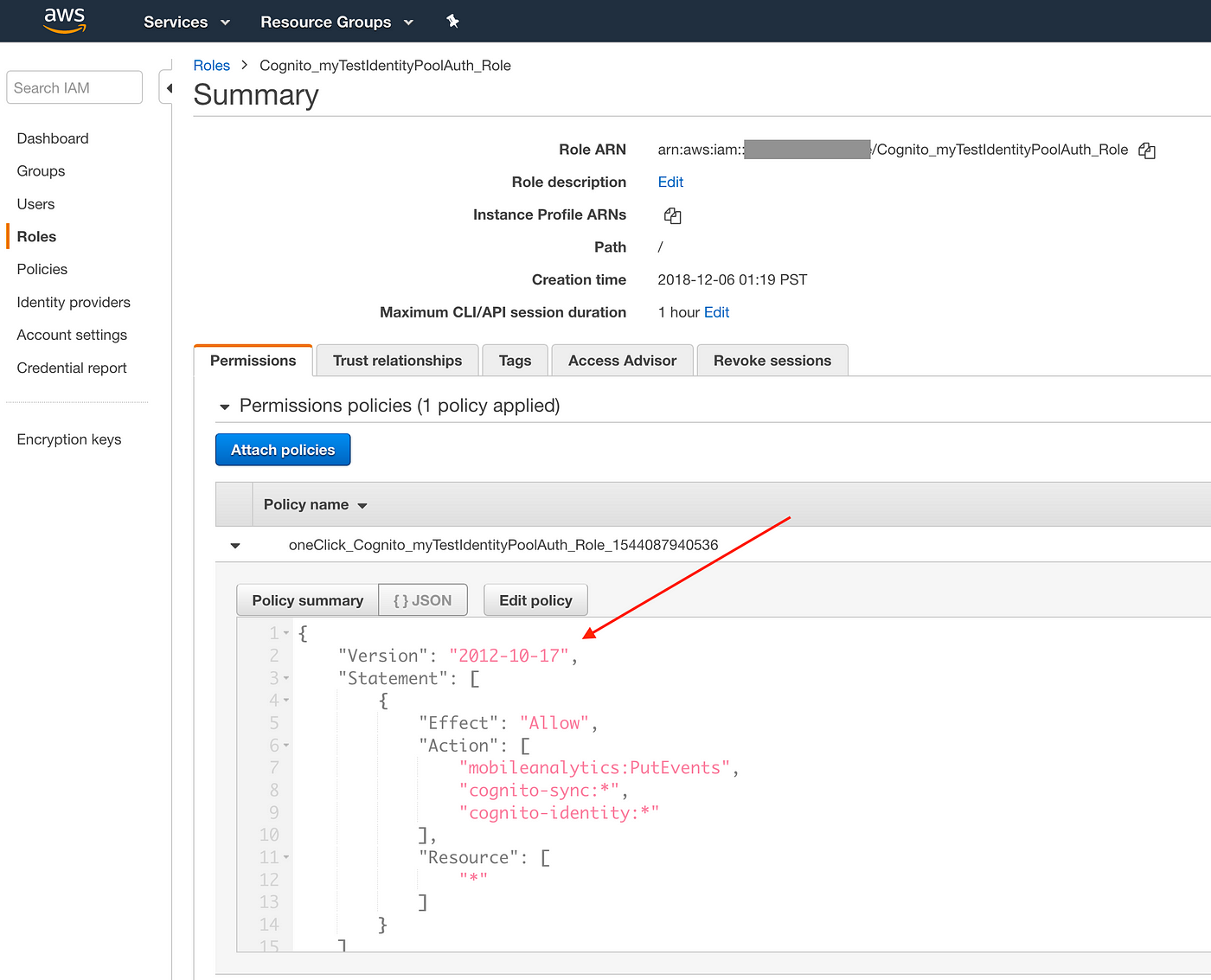

We will edit the roles using the policy editor and use the policy json below. We will assume our S3 bucket name is "my-test-bucket-amplify".
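The original policy did not survive in this copy of the article, so what follows is only a sketch of what an authenticated-role policy along these lines might look like. It assumes the public/, protected/ and private/ prefix convention discussed later in the post; `buildAuthRolePolicy` is a hypothetical helper that prints JSON you could paste into the policy editor, not part of any AWS library.

```javascript
// Sketch only: builds an IAM policy document for the Cognito authenticated role.
// The statement layout is an assumption based on the public/, protected/ and
// private/ prefix convention; it is not the author's original policy.
function buildAuthRolePolicy(bucketName) {
  const bucketArn = "arn:aws:s3:::" + bucketName;
  // IAM policy variable that AWS resolves to the caller's Cognito Identity ID.
  // It is kept in a plain string so JavaScript does not try to interpolate it.
  const identityId = "${cognito-identity.amazonaws.com:sub}";

  return {
    Version: "2012-10-17",
    Statement: [
      {
        // Read, write and delete in the shared area and in the caller's own folders.
        Effect: "Allow",
        Action: ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
        Resource: [
          bucketArn + "/public/*",
          bucketArn + "/protected/" + identityId + "/*",
          bucketArn + "/private/" + identityId + "/*",
        ],
      },
      {
        // Any user of the app may read other users' protected files.
        Effect: "Allow",
        Action: ["s3:GetObject"],
        Resource: [bucketArn + "/protected/*"],
      },
      {
        // Listing is limited to the prefixes the caller is allowed to see.
        Effect: "Allow",
        Action: ["s3:ListBucket"],
        Resource: [bucketArn],
        Condition: {
          StringLike: {
            "s3:prefix": [
              "public/", "public/*",
              "protected/", "protected/*",
              "private/" + identityId + "/",
              "private/" + identityId + "/*",
            ],
          },
        },
      },
    ],
  };
}

// Print the JSON for the bucket used in this article.
console.log(JSON.stringify(buildAuthRolePolicy("my-test-bucket-amplify"), null, 2));
```

For the unauthenticated role, the same sketch would apply with s3:PutObject and s3:DeleteObject removed from the first statement.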

We will repeat this step for the unauth role; however, for the unauth role make sure you remove the DeleteObject and PutObject permissions. Everything else remains the same as the policy json above. We will discuss the public/, protected/, and private/ prefixes, as seen in the json above, a little bit further down.

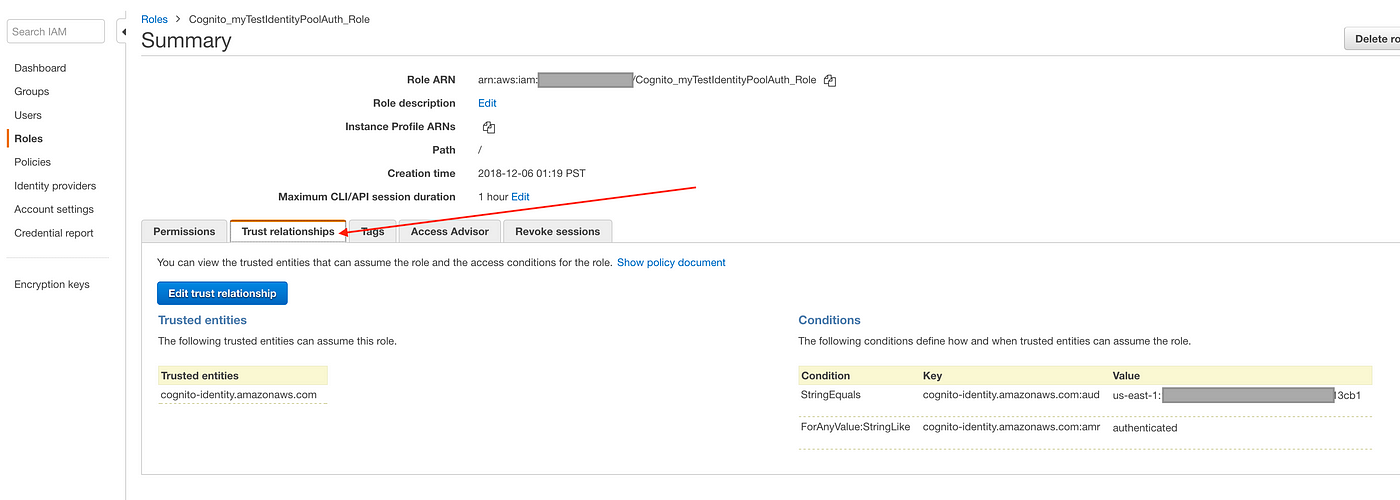

We will also need to make sure that the "Trust Relationships" for both roles are set correctly. Click on the "Trust Relationship" tab and then "Edit trust relationship".

IMPORTANT: Make sure that cognito-identity.amazonaws.com:aud is your Cognito identity pool ID, and that cognito-identity.amazonaws.com:amr is authenticated for the authenticated role and unauthenticated for the unauthenticated role.
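To make the two conditions concrete, here is a minimal sketch of a trust policy with the aud and amr keys filled in. The helper name `buildTrustPolicy` and its parameters are hypothetical; the identity pool ID is the example value from earlier in this article.

```javascript
// Sketch only: builds the trust policy for one of the two identity pool roles.
// `identityPoolId` and `state` are illustrative parameter names.
function buildTrustPolicy(identityPoolId, state) {
  if (state !== "authenticated" && state !== "unauthenticated") {
    throw new Error("state must be 'authenticated' or 'unauthenticated'");
  }
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        // Only identities federated through Cognito may assume the role.
        Principal: { Federated: "cognito-identity.amazonaws.com" },
        Action: "sts:AssumeRoleWithWebIdentity",
        Condition: {
          // aud must match the identity pool this role belongs to.
          StringEquals: { "cognito-identity.amazonaws.com:aud": identityPoolId },
          // amr distinguishes the authenticated role from the unauthenticated one.
          "ForAnyValue:StringLike": { "cognito-identity.amazonaws.com:amr": state },
        },
      },
    ],
  };
}

console.log(
  JSON.stringify(
    buildTrustPolicy("us-east-1:16e03s22-ce44-4cf5-jhg8-f11245xfcB15", "authenticated"),
    null,
    2
  )
);
```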

Once both IAM roles are edited as shown above, they will enable authenticated users to perform S3 operations.

Setting Up Amplify

We will install AWS Amplify's JavaScript SDK (amplify-js). Here's the GitHub repo for the SDK and here's the documentation.

Note: Amplify provides some standard React components (HOCs) which can be created using the Amplify CLI. We will not be using the Amplify CLI, but will instead do our own implementation using the modules within amplify-js.

We will use a create-react-app boilerplate to get started. Let's create a CRA project and name it amplify-s3. We will also install amplify-js into our project.

$ create-react-app amplify-s3

$ cd amplify-s3

$ npm install aws-amplify --save

Now that we have amplify-js installed, we need to make use of the Storage sub-module for S3-related operations (and the Auth sub-module for Cognito authentication-related operations).

Let's configure Amplify with the Cognito user pool, the identity pool information, and the S3 bucket information. In order to do so, we will create a services.js in the root of the project and put all the Amplify Auth/S3 related operations there so that we can re-use it throughout our app. In services.js the first thing to do is to initialize and configure Amplify:
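The original snippet is missing from this copy, so here is a minimal sketch of what such a configuration step could look like. Several things are assumptions: the REACT_APP_* variable names are hypothetical, the Auth/Storage config shape is the one amplify-js accepted around the time of the article (newer releases use a different shape), and the Amplify object and environment are passed in as parameters so the sketch has no hidden dependencies. The article's own configureAmplify() takes no arguments.

```javascript
// Sketch of services.js (assumptions: variable names, config shape, injected params).
// A matching .env file would declare, for example:
//   REACT_APP_IDENTITY_POOL_ID, REACT_APP_REGION, REACT_APP_USER_POOL_ID,
//   REACT_APP_USER_POOL_WEB_CLIENT_ID, REACT_APP_BUCKET_NAME
function buildAmplifyConfig(env) {
  return {
    Auth: {
      identityPoolId: env.REACT_APP_IDENTITY_POOL_ID,
      region: env.REACT_APP_REGION,
      userPoolId: env.REACT_APP_USER_POOL_ID,
      userPoolWebClientId: env.REACT_APP_USER_POOL_WEB_CLIENT_ID,
    },
    Storage: {
      bucket: env.REACT_APP_BUCKET_NAME,
      region: env.REACT_APP_REGION,
      identityPoolId: env.REACT_APP_IDENTITY_POOL_ID,
    },
  };
}

// `amplify` stands for the Amplify object imported from aws-amplify.
function configureAmplify(amplify, env) {
  amplify.configure(buildAmplifyConfig(env));
}
```

In the app, this variant would be called once at start-up as configureAmplify(Amplify, process.env), after importing Amplify from aws-amplify.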

All the pieces of the configuration information above are mandatory in order to use the Storage sub-module for S3 and authentication (Auth) operations. Note the process.env.REACT_APP_* variables. These are environment variables that are declared inside a .env file in the root of your project. CRA has built-in support for dotenv, so we can take advantage of that without having to type in the values again and again. The .env file's content will look like this, and we covered each of these values in the Cognito section.

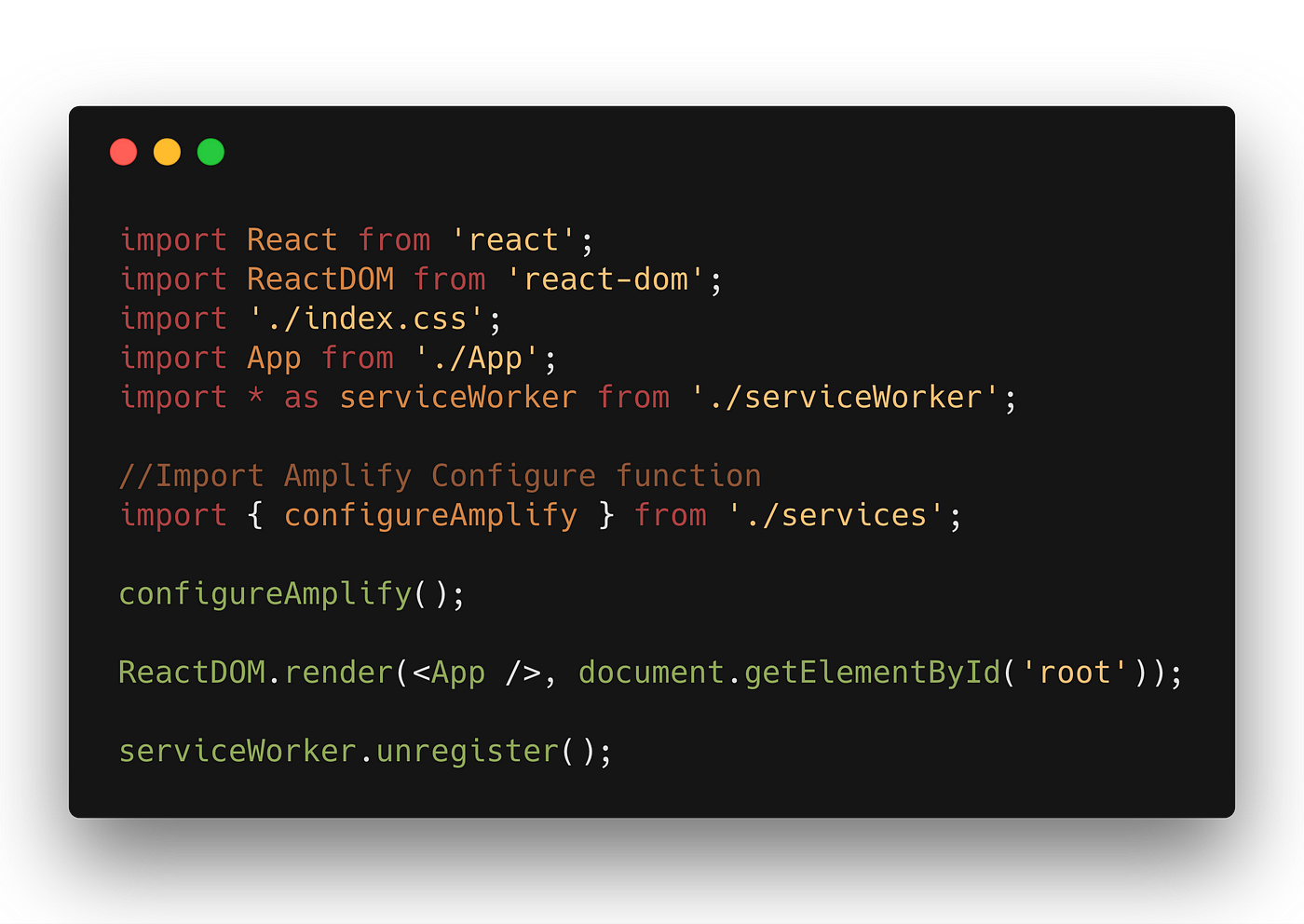

We need to perform Amplify.configure when your app is initialized, so we will need to import the configureAmplify() function in the index.js file of your project using a named import, so it would look like this:

It is important to call the configureAmplify() function before your App mounts. At this point we are good to start using Storage and Auth.

For the sake of this article, we will assume you already have code in place to sign your users into your app using the Auth sub-module and its built-in signIn() method, i.e. Auth.signIn(), and so on. Take a look at the documentation, which explains Auth sub-module usage.

Using the Storage sub-module

We can now use the Storage sub-module in Amplify and start performing S3 operations. But before we can do that, there is one important shortcoming that we need to discuss. As of this writing Amplify.configure() supports initializing only a single S3 bucket. This may not be the ideal use case for everyone; for instance, you may want user images to go to one bucket and videos to another, and so on. This could become a particularly challenging scenario with the way we configured Amplify above, and unfortunately this is the only way to configure Amplify right now. But not all is lost. Let's take a look at how we can use multiple S3 buckets in your app.

In order to be able to use different buckets, we need to perform some Storage-specific configuration, specifically setting the bucket name, level, region and Identity Pool ID every time you perform an S3 operation. Although this may not be an ideal way to do it, it is the only way to do it. We will add a new function in our services.js file to configure Storage with all these parameters for this purpose. We will first use a modular import to import Storage from Amplify and then perform configure():
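As the original snippet is not available here, the following is a hedged sketch of such a helper. It assumes Storage.configure() accepts bucket, level, region and identityPoolId as described in this section. `makeSetS3Config` is a hypothetical factory, introduced only so the Storage module, region and identity pool ID can be supplied once and the resulting function keeps a two-argument (bucket, level) call shape.

```javascript
// Sketch only. `storage` stands for the Storage module imported from aws-amplify.
// `makeSetS3Config` is a hypothetical helper, not part of amplify-js.
function makeSetS3Config(storage, region, identityPoolId) {
  // The returned function is what the rest of the app would call.
  return function SetS3Config(bucket, level) {
    // Re-point Storage at the given bucket and access level.
    storage.configure({
      bucket: bucket,
      level: level,
      region: region,
      identityPoolId: identityPoolId,
    });
  };
}
```

In services.js this could be wired up once, for example as makeSetS3Config(Storage, "us-east-1", process.env.REACT_APP_IDENTITY_POOL_ID), where the region and the variable name are again illustrative.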

Let's discuss what we did here, and what level means. We simply passed an object to the built-in configure() method for Storage. Most of the values are familiar: bucket is the bucket's name, region is the AWS region, and identityPoolId is the CIP ID. But what does level mean?

The Storage module can manage files with three different access levels; public, protected and private.

- public: Files with public access level can be accessed by all users who are using your app. In S3, they are stored under the public/ path in your S3 bucket. Amplify will automatically prepend the public/ prefix to the S3 object key (file name) when the level is set to public.

- protected: Files with protected access level are readable by all users but writable only by the creating user. In S3, they are stored under protected/{user_identity_id}/ where user_identity_id corresponds to the unique Amazon Cognito Identity ID for that user. IMPORTANT: The Cognito user pool will automatically assign your users a "User ID" (which looks like 09be2c02-8a21-4f51-g778-05448a6afbb5) and the Identity Pool assigns users an "Identity ID" (which looks like us-east-1:09be2c02-3d0a-400d-a718-4e284dca6de4), and they are different. The user_identity_id signifies the "Identity ID". Amplify will automatically prepend the protected/ prefix to the S3 object key (file name) when the level is set to protected, and use the user_identity_id of the user that is logged into your app and has a valid session. So, given a file name of my_profile_pic.jpg, a bucket name of my_profile_pics_bucket, and an authenticated user Identity ID of us-east-1:09be2c02-3d0a-400d-a718-4e284dca6de4, the S3 path will look like s3://my_profile_pics_bucket/protected/us-east-1:09be2c02-3d0a-400d-a718-4e284dca6de4/my_profile_pic.jpg

- private: Files with private access level are accessible only to the specific authenticated user who owns them. In S3, they are stored under private/{user_identity_id}/ where user_identity_id corresponds to the unique Amazon Cognito Identity ID for that user.

In our case we want users to be able to store their files under the private file access level in the S3 bucket. This means that Amplify will always perform the operations under s3://your_bucket_name/private/{user_identity_id}/.
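The prefix rules above can be illustrated with a few lines of plain JavaScript. This is purely an illustration of the mapping from access level to S3 path: Amplify does this internally, and `resolveS3Path` is a hypothetical helper, not a library call.

```javascript
// Illustration only: mirrors the prefix rules described above.
function resolveS3Path(bucket, level, identityId, key) {
  switch (level) {
    case "public":
      // Shared area: no per-user folder.
      return "s3://" + bucket + "/public/" + key;
    case "protected":
    case "private":
      // Per-user folder named after the Cognito Identity ID (not the User ID).
      return "s3://" + bucket + "/" + level + "/" + identityId + "/" + key;
    default:
      throw new Error("Unknown access level: " + level);
  }
}

console.log(
  resolveS3Path(
    "my_profile_pics_bucket",
    "protected",
    "us-east-1:09be2c02-3d0a-400d-a718-4e284dca6de4",
    "my_profile_pic.jpg"
  )
);
// prints s3://my_profile_pics_bucket/protected/us-east-1:09be2c02-3d0a-400d-a718-4e284dca6de4/my_profile_pic.jpg
```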

We can now use our reusable function in the services.js file to configure the S3 Storage sub-module by simply passing the bucket name and the file access level like so:

SetS3Config("my_other_bucket","private");

Setting up the S3 bucket

We will now set up our S3 bucket so that it works with Amplify's Storage sub-module. The Storage sub-module is a convenient wrapper around S3's API endpoints (which are also used by the AWS CLI internally) and abstracts away much of the implementation detail from you. This means that you would actually be making API calls to the S3 endpoints from your React/JavaScript code. With that in mind let us quickly create an S3 bucket.

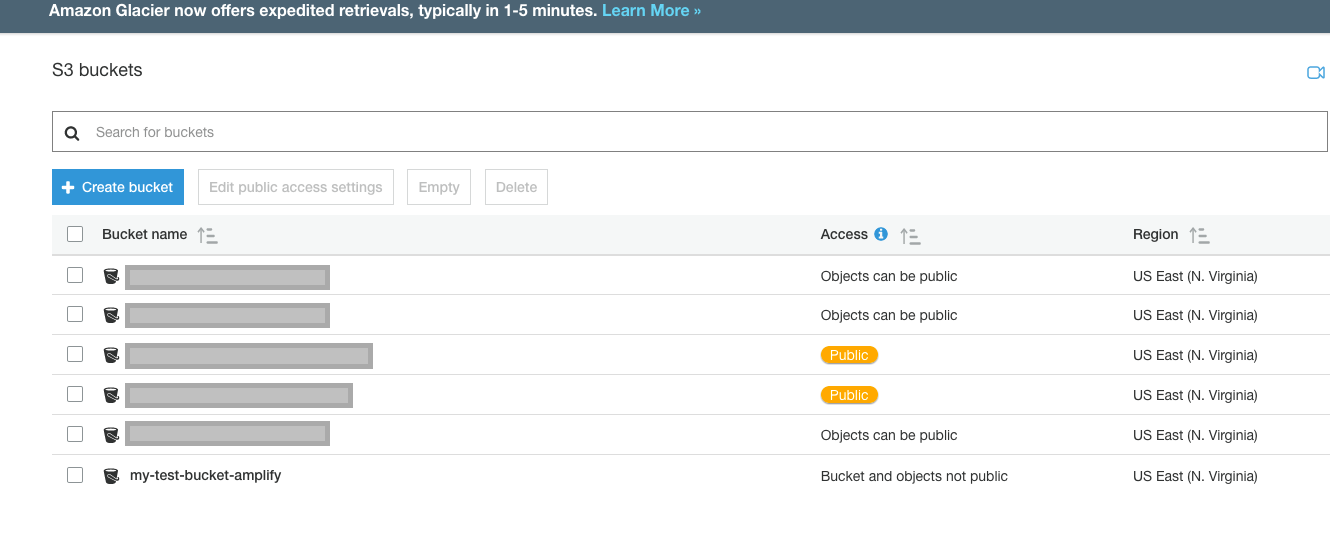

Head over to the AWS S3 console and click "Create Bucket". We will name our bucket "my-test-bucket-amplify".

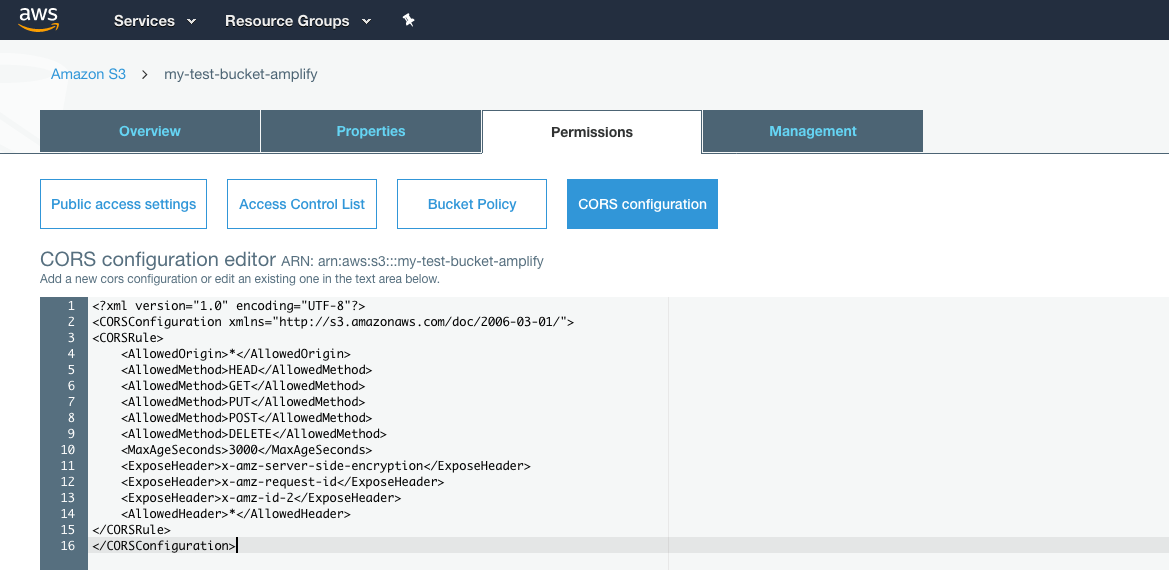

We now have to enable CORS (Cross-Origin Resource Sharing) for this bucket. We need to perform this step since Amplify will interact with this bucket through the S3 REST API endpoints. In order to do that, click on the bucket name, go to the "Permissions" tab, and then click the "CORS Configuration" button.

In the CORS configuration editor enter the XML below:

<?xml version="1.0" encoding="UTF-8"?>

<CORSConfiguration xmlns="http://s3.amazonaws.com/doc/2006-03-01/">

<CORSRule>

<AllowedOrigin>*</AllowedOrigin>

<AllowedMethod>HEAD</AllowedMethod>

<AllowedMethod>GET</AllowedMethod>

<AllowedMethod>PUT</AllowedMethod>

<AllowedMethod>POST</AllowedMethod>

<AllowedMethod>DELETE</AllowedMethod>

<MaxAgeSeconds>3000</MaxAgeSeconds>

<ExposeHeader>x-amz-server-side-encryption</ExposeHeader>

<ExposeHeader>x-amz-request-id</ExposeHeader>

<ExposeHeader>x-amz-id-2</ExposeHeader>

<AllowedHeader>*</AllowedHeader>

</CORSRule>

</CORSConfiguration>

This XML configures CORS for the S3 bucket API endpoint. You can allow all (*) domains in AllowedOrigin, or you can list a specific domain, for example <AllowedOrigin>yourdomain.com</AllowedOrigin>, to restrict calls to only your domain. You can also select which REST methods to allow by listing them (or not listing them) with <AllowedMethod>. Learn more about S3 CORS configuration here. That's about it for S3. Note that the bucket remains private by default, and we have not added any bucket policy explicitly since we do not need one for this example. Now it's time to write some React code to see our upload operation in action.

Uploading from React App

We've come a long way, so now it's time to put some code into our CRA project's index.js file and build a component that can actually perform an upload. Here's what the code would look like:

Pretty straightforward. Let's take a look at what we did there.

- We imported configureAmplify() and SetS3Config() from our services.js file. These two functions will configure Amplify to initialize the services and help us set the bucket configuration respectively.

- We then wrote a component with basic jsx with an upload field with <input type="file"> and a couple of buttons: one to select files from our computer and another to upload the selected file. NOTE: the code restricts the file types to png and jpeg using accept="image/png, image/jpeg" but that's optional.

- We save the image file and the image file name from the HTML5 File API's files[] array (an array because you may select multiple files, but in our case only one file is allowed) in the state, using setState on the onChange event of the input element. NOTE: The actual upload element (the file selector, i.e. the regular button) is hidden and we have a regular field there just for styling purposes.

- Once we have the file and file name in the state, we invoke the uploadImage function from the button's onClick event.

- The uploadImage function initializes the S3 bucket configuration using the SetS3Config function by setting the bucket name ("my-test-bucket-amplify") and the file access level ("protected").

- Finally, we call the Storage.put() function, which takes in the object key (i.e. the file name) with an S3 object prefix (in this case userimages/), the file, and a file metadata object where we passed an object with contentType. Check out the Storage.put() examples and documentation here.
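The last two steps above can be sketched as a small standalone function. This is not the article's original component code, which is missing from this copy. It assumes Storage.put(key, file, options) returns a promise that resolves to an object with a key property, and it takes the Storage module and the bucket-configuration helper as parameters (an assumption made so the sketch runs without React or Amplify installed).

```javascript
// Sketch only. `storage` stands for Amplify's Storage module and `setS3Config`
// for the bucket-configuration helper from services.js.
function uploadImage(storage, setS3Config, file, fileName) {
  // Point Storage at the target bucket and access level before the call.
  setS3Config("my-test-bucket-amplify", "protected");

  // The object key gets the userimages/ prefix; contentType comes from the File.
  return storage
    .put("userimages/" + fileName, file, { contentType: file.type })
    .then(function (result) {
      // Resolve with the stored object key so the caller can show or save it.
      return result.key;
    });
}
```

Inside the component, the button's onClick handler would pass the file and file name held in state, and could attach a catch() to report a failed upload to the user.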

Here's what it looks like:

Conclusion

It's a rather simple implementation of a file uploader from your app, considering you are using Cognito and Amplify, but it also leaves quite a bit to be desired. For example, multipart uploads are non-existent out of the box, and neither is a file upload progress subscriber that indicates how much of the file has been uploaded (in order to show some sort of progress bar to the user while the file uploads), and so on. Still, this is a step in the right direction with Amplify, which simplifies a lot along the way in providing simple upload functionality in your web (or mobile) apps for your users.

Source: https://medium.com/@anjanava.biswas/uploading-files-to-aws-s3-from-react-app-using-aws-amplify-b286dbad2dd7
